The following documentation is a part of the Graylog API Security product (formerly Resurface) technical documentation knowledge base. As we continue to improve our documentation offerings, please note that some articles or pieces of content may change. If you have any questions for the Graylog documentation team, please feel free to reach out to us via the community’s Documentation Campfire forum.

What is Graylog API Security?

Graylog API Security (formerly known as Resurface) captures real API traffic to detect real attacks, leaks, and other threats to your APIs. Our software discovers your APIs and the risks from their use by legit customers, malicious attackers, partners and insiders. With Graylog API Security, you'll be able to:

- Easily capture API calls at scale to your own first-party database.

- Immediately identify attacks and failures for REST and GraphQL APIs.

- Continuously scan API calls for quality and security risks.

- Threat hunt using any combination of request and response data elements.

- Retroactively search for identified zero-day threats and exploits.

- Create and share custom signatures without having to write any code.

- Integrate and automate using webhook alerts, SQL queries and data exports.

- Deploy in minutes to any local or cloud-based Kubernetes environment.

- Scale by adding Trino worker nodes or Iceberg storage (on S3 or Minio).

- Configure user SSO with OAuth, JWT, LDAP and Kerberos.

- Import and export API calls and signature definitions.

- Protect user privacy with logging rules and role-based controls.

Running on AWS

Resurface installs on any Kubernetes cluster with a single helm command, and uses optimized defaults when installing on Amazon EKS.

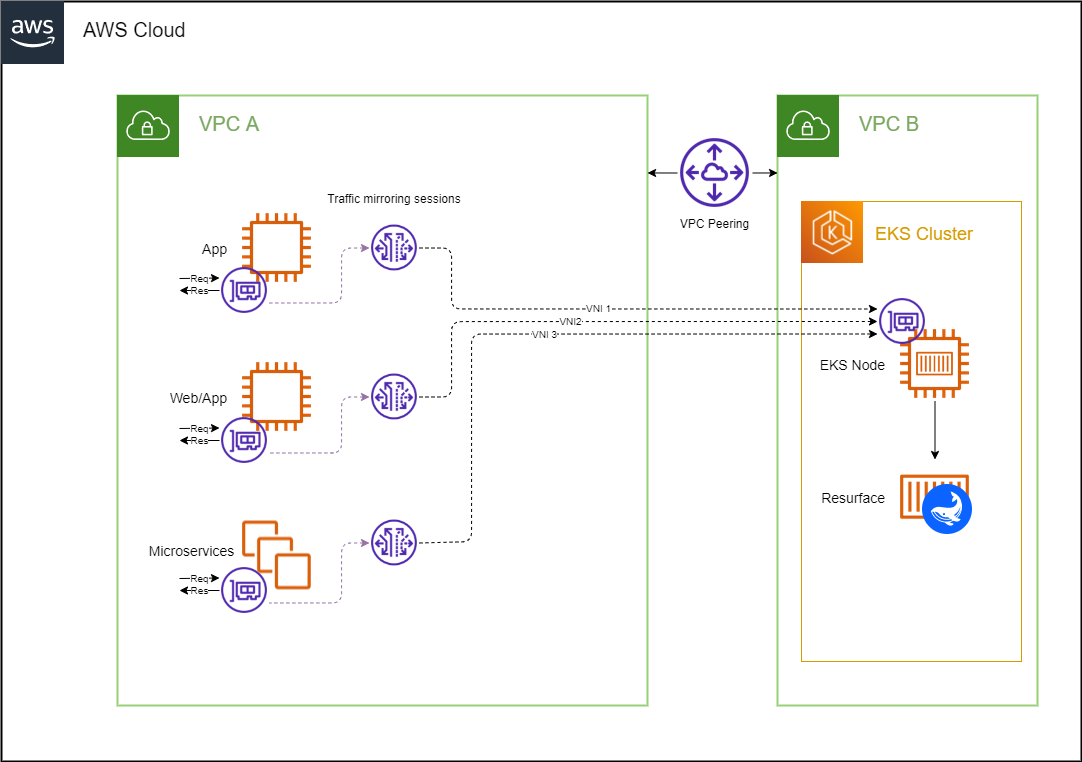

Then you can start capturing API calls to services running on Kubernetes, and other services running on AWS:

- Deploy a sniffer daemonset to automatically monitor APIs running inside your EKS clusters.

- Use VPC traffic mirroring to monitor APIs running on EC2, ECS or Fargate.

- Capture API calls fronted by AWS API Gateway using Kinesis integration.

- Use open-source logger libraries to capture API calls directly from your microservices.

Running on Azure

Resurface installs on any Kubernetes cluster with a single helm command, and uses optimized defaults when installing on Azure AKS.

Then you can start capturing API calls to services running on Kubernetes, and other services running on Azure:

- Deploy a sniffer daemonset to automatically monitor APIs running on your AKS clusters.

- Capture API calls fronted by Azure API Management (APIM) using Azure Event Hub integration.

- Use open-source logger libraries to capture API calls directly from your microservices.

Running on GCP

Resurface installs on any Kubernetes cluster with a single helm command, and uses optimized defaults when installing on Google GKE.

Then you can start capturing API calls to services running on Kubernetes, and other services running on GCP:

- Deploy a sniffer daemonset to automatically monitor APIs running on your GKE clusters.

- Use open-source logger libraries to capture API calls directly from your microservices.

Running on IBM Cloud

Resurface installs on any Kubernetes cluster with a single helm command, and uses optimized defaults when installing on IBM Cloud.

Then you can start capturing API calls to services running on Kubernetes, and other services running on IBM Cloud:

- Deploy a sniffer daemonset to automatically monitor APIs running on your Kubernetes clusters.

- Use open-source logger libraries to capture API calls directly from your microservices.

Running on Kubernetes

Resurface installs on any user-managed Kubernetes cluster (including microk8s), on 64-bit Intel and ARM chipsets.

Then you can start capturing API calls to services running on Kubernetes, and legacy systems on physical or virtualized infrastructure:

- Deploy a sniffer daemonset to automatically monitor APIs running on your Kubernetes clusters.

- Use open-source logger libraries to capture API calls directly from your microservices.

Installation

Getting Started

Installation

Using helm

Installing on AWS

Installing on Azure

Installing on GCP

Installing on IBM Cloud

Installing on microk8s

Installing on Kubernetes

Administration

Capturing API Calls

Adding Capacity

SQL Reference

JSON Format

Logging Rules

Troubleshooting

AWS Tutorials

✉️ Contact support

Resurface is Kubernetes-native software that is installed using helm. This section will provide all the installation commands to use for your target cloud platforms.

Using helm

Helm is the standard package manager for Kubernetes. Think apt or brew, but for your Kubernetes cluster.

After installing helm, you will be able to install and upgrade Kubernetes applications (called charts) onto your Kubernetes cluster. The main helm commands are shown below, and the rest of this documentation gives all the specific examples you'll need to administer your installation.

helm repo update # fetch latest chart versions from all repositories

helm repo add <...> # add a remote repository to use for installations

helm install <...> # install a specific chart onto your Kubernetes cluster

helm upgrade <...> # upgrade or reconfigure a specific chartAll of the examples below show installation into a dedicated namespace, so that these containers do not interfere with any others that are already deployed.

Installing on AWS

When installing Resurface on an existing EKS cluster, you'll need 6 vCPU and 18 GiB of memory for each Resurface node deployed. If these requirements cannot be met by your existing EKS cluster, create a new node group using m7g.2xlarge (ARM), m7i.2xlarge (x86), or larger VMs.

In addition, the Amazon EBS CSI Driver add-on must be enabled in your cluster in order to provision persistent volumes. The Amazon EBS CSI plugin requires IAM permissions to make calls to AWS APIs on your behalf, so be sure to create the corresponding IAM Role, or attach the AmazonEBSCSIDriverPolicy to your existing role.

Then install Resurface with helm, using optimized default options for AWS:

$ helm repo add resurfaceio https://resurfaceio.github.io/containers; helm repo update; helm install resurface resurfaceio/resurface --create-namespace --namespace resurface --set provider=awsFinally run this script to get your database URL, then paste into your browser to access your database:

$ echo http://$(kubectl get svc resurface-kubernetes-ingress --namespace resurface --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")/ui/Installing on Azure

When installing Resurface on an existing AKS cluster, you'll need 6 vCPU and 18 GiB of memory for each Resurface node deployed. If these requirements cannot be met by your existing AKS cluster, create a new node pool using Standard_D8ps_v5 (ARM), Standard_D8as_v5 (x86), or larger VMs.

Then install Resurface with helm, using optimized default options for Azure:

$ helm repo add resurfaceio https://resurfaceio.github.io/containers; helm repo update; helm install resurface resurfaceio/resurface --create-namespace --namespace resurface --set provider=azure --set kubernetes-ingress.controller.service.externalTrafficPolicy=LocalFinally run this script to get your database URL, then paste into your browser to access your database:

$ echo http://$(kubectl get svc resurface-kubernetes-ingress --namespace resurface --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")/ui/Installing on GCP

When installing Resurface on an existing GKE cluster, you'll need 6 vCPU and 18 GiB of memory for each Resurface node deployed. If these requirements cannot be met by your existing GKE cluster, create a new node pool using c3d-standard-8 (x86) or larger VMs. We do not recommend deploying on ARM at this time.

Then install Resurface with helm, using optimized default options for GCP:

$ helm repo add resurfaceio https://resurfaceio.github.io/containers; helm repo update; helm install resurface resurfaceio/resurface --create-namespace --namespace resurface --set provider=gcpFinally run this script to get your database URL, then paste into your browser to access your database:

$ echo http://$(kubectl get svc resurface-kubernetes-ingress --namespace resurface --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")/ui/Installing on IBM Cloud

When installing Resurface on Red Hat OpenShift on IBM Cloud, you'll need 6 vCPU and 18 GiB of memory for each Resurface node deployed. If these requirements cannot be met by your existing OpenShift cluster, create a node pool using bx2-8x32 (x86) or larger VMs. We do not recommend deploying on ARM at this time.

Create an OpenShift project:

$ oc new-project resurface --description="Resurface discovers and alerts on quality and security signatures in your API traffic" --display-name="Resurface"Then install Resurface with helm, using optimized default options:

$ helm install resurface resurfaceio/resurface --set provider=ibm-openshift --set ingress.controller.enabled=false --set ingress.tls.host=$(oc -n openshift-ingress-operator get ingresscontrollers.operator.openshift.io default -o jsonpath='{.status.domain}') --namespace resurfaceAdd the `anyuid` scc to the resurface service account:

$ oc adm policy add-scc-to-user anyuid -z resurface-saFinally run this script to get your database URL, then paste into your browser to access your database:

$ echo http://$(oc get route --namespace resurface --template "{{ (index .items 0).spec.host }}")/ui/Installing on microk8s

Microk8s is a lightweight Kubernetes distribution, which runs on your own hardware. To start, you'll need a Linux machine or VM with at least 8 vCPU and 24GB of memory. Each Resurface node requires 6 vCPU and 18 GB of memory, and there needs to be resources left over for microk8s, Minio (if enabled), and the operating system.

Microk8s requires snap, which is enabled by default on Ubuntu and its derivatives. For other Linux distributions, enable snap support before installing microk8s.

# install microk8s

sudo snap install microk8s --classic;

sudo usermod -a -G microk8s $USER;

newgrp microk8s;

alias helm='microk8s helm';

alias kubectl='microk8s kubectl';

# disable microk8s daemon-apiserver-kicker

echo "--bind-address 0.0.0.0" >> /var/snap/microk8s/current/args/kube-apiserver;

microk8s stop; microk8s start;

# configure microk8s

microk8s enable dns;

microk8s enable hostpath-storage;

microk8s status --wait-ready;

# bind machine IP address to microk8s

microk8s enable metallb:X.X.X.X-X.X.X.X;

# install Resurface

helm repo add resurfaceio https://resurfaceio.github.io/containers;

helm repo update;

helm install resurface resurfaceio/resurface --create-namespace --namespace resurface;Run this script to get your database URL, then paste into your browser to access your database:

$ echo http://$(kubectl get svc resurface-kubernetes-ingress --namespace resurface --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")/ui/Installing on Kubernetes

For AKS, EKS, GKE, OpenShift, and microk8s, it's recommended to use the instructions provided above. When installing Resurface on other types of Kubernetes clusters, including the single-node Kubernetes cluster bundled with Docker Desktop, you'll need 6 vCPU and 18 GiB of memory for each Resurface node deployed.

Install Resurface with helm, using generic default options:

$ helm repo add resurfaceio https://resurfaceio.github.io/containers; helm repo update; helm install resurface resurfaceio/resurface --create-namespace --namespace resurfaceRun this script to get your database URL, then paste into your browser to access your database:

$ echo http://$(kubectl get svc resurface-kubernetes-ingress --namespace resurface --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")/ui/Administration

This section covers all the helm and kubectl commands you'll need to administer your Resurface clusters, whether you are a helm guru already or using Kubernetes for the first time.

Configuring DNS

In order to enable TLS or authentication, you'll first need to assign a DNS name to your Resurface installation. We'll use the name MYRESURFACE in the examples here.

The MYRESURFACE name needs to resolve to the EXTERNAL-IP associated with your Resurface installation, as reported by this script:

$ kubectl get svc resurface-kubernetes-ingress -n resurfaceOnce you have created your DNS entry, you should be able to access your Resurface installation using this url:

Enabling TLS

Resurface bundles an ingress controller that is intended to be used for TLS termination. We strongly recommend configuring TLS so that data is encrypted over the network.

You can easily generate a TLS certificate-key pair for your Resurface installation, or you can use your own certificate if you have one already.

⚠️ DNS resolution must be configured before TLS can be enabled.

Cert-manager configurations

If you don't have a TLS certificate-key pair yet, Resurface makes it super easy to generate one. Resurface includes the cert-manager utility to automatically issue (and renew) a TLS certificate for your Resurface installation, using Let's Encrypt as your certificate authority.

First install cert-manager into your Resurface namespace:

$ helm repo add jetstack https://charts.jetstack.io; helm repo update; helm install cert-manager jetstack/cert-manager --namespace resurface --version v1.13.3 --set installCRDs=true --set prometheus.enabled=falseNow enable TLS for your Resurface installation, using cert-manager to manage your certificate:

$ helm upgrade -i resurface resurfaceio/resurface --namespace resurface --set ingress.tls.enabled=true --set ingress.tls.host=MYRESURFACE --set ingress.tls.autoissue.enabled=true --set ingress.tls.autoissue.staging=false --set ingress.tls.autoissue.email=MYEMAIL --reuse-valuesBring-your-own-certificate configurations

If you already have a TLS certificate-key pair, you can create a Kubernetes secret to store them like this:

$ kubectl create secret tls resurface-tls-secret -n resurface --cert=PATH/TO/CERT/FILE --key=PATH/TO/KEY/FILENow enable TLS for your Resurface installation, referencing the Kubernetes secret:

$ helm upgrade -i resurface resurfaceio/resurface --namespace resurface --set ingress.tls.enabled=true --set ingress.tls.host=MYRESURFACE --set ingress.tls.byoc.secretname=resurface-tls-secret --reuse-valuesEnabling authentication

⚠️ DNS resolution and TLS has to be configured before authentication can be enabled.

Basic authentication

Use the following command to enable basic authentication for a single user. (On some systems, you may have to add noglob to the start of the shell command)

$ helm upgrade -i resurface resurfaceio/resurface -n resurface --set auth.enabled=true --set auth.basic.enabled=true --set auth.basic.credentials[0].username=rob --set auth.basic.credentials[0].password=blah1234 --reuse-valuesTo enable basic authentication for multiple users, it's easier to create an auth.yaml file than to specify user names and passwords at the command line. Here's an example auth.yaml file that defines three users:

auth:

enabled: true

basic:

enabled: true

credentials:

- username: rob

password: blah1234

- username: jsmith

password: hunter2

- username: admin

password: irtRUqUp7fkfLReload the auth.yaml file whenever user names or passwords are changed:

$ helm upgrade -i resurface resurfaceio/resurface -n resurface -f auth.yaml --reuse-values⚠️ At this time, Resurface does not support password resets from the log in page. The only way to change user passwords is through helm.

OAuth authentication

auth:

enabled: true

oauth2:

enabled: true

issuer: https://accounts.google.com

authurl: https://accounts.google.com/o/oauth2/v2/auth

tokenurl: https://oauth2.googleapis.com/token

jwksurl: https://www.googleapis.com/oauth2/v3/certs

userinfourl: https://openidconnect.googleapis.com/v1/userinfo

clientid: sampleid123.apps.googleusercontent.com

clientsecret: samplesecret456Reload the auth.yaml file whenever OAuth configuration is changed:

$ helm upgrade -i resurface resurfaceio/resurface -n resurface -f auth.yaml --reuse-valuesJWT authentication

auth:

enabled: true

jwt:

enabled: true

jwksurl: << your JWKS URL>>Reload the auth.yaml file whenever JWT configuration is changed:

$ helm upgrade -i resurface resurfaceio/resurface -n resurface -f auth.yaml --reuse-valuesUsing multiple authentication methods

auth:

enabled: true

basic:

enabled: true

credentials:

- username: rob

password: blah1234

- username: jsmith

password: hunter2

- username: admin

password: irtRUqUp7fkfL

oauth2:

enabled: true

issuer: https://accounts.google.com

authurl: https://accounts.google.com/o/oauth2/v2/auth

tokenurl: https://oauth2.googleapis.com/token

jwksurl: https://www.googleapis.com/oauth2/v3/certs

userinfourl: https://openidconnect.googleapis.com/v1/userinfo

clientid: sampleid123.apps.googleusercontent.com

clientsecret: samplesecret456$ helm upgrade -i resurface resurfaceio/resurface -n resurface -f auth.yaml --reuse-valuesSetting timezone

Containers run with UTC timezone by default, but it's easy to set all containers to a specific timezone:

$ helm upgrade -i resurface resurfaceio/resurface -n resurface --set custom.config.tz="America/Denver" --reuse-valuesUpgrading to latest version

It's easy to upgrade a Resurface cluster to the latest release version. This makes sure that you have all the latest features, fixes and security patches. All data stored in your Resurface database will be available after the upgrade without any manual intervention.

$ helm repo update; helm upgrade -i resurface resurfaceio/resurface -n resurface --reuse-valuesUninstalling Resurface

Removing Resurface from your Kubernetes cluster is easy, and won't impact any other applications deployed using Helm. Removing the Resurface namespace with kubectl also removes all persistent volumes. Please note there is no way to reverse this once done.

$ helm uninstall resurface -n resurface; kubectl delete namespace resurfaceCapturing API Calls

Getting Started

Installation

Administration

Capturing API Calls

Getting capture URL

Submitting JSON

Sniffer DaemonSet

Sniffer sidecar

VPC traffic mirroring

Tyk API Gateway

AWS API Gateway

Azure API Management

Kong API Gateway

Logger libraries

Adding Capacity

SQL Reference

JSON Format

Logging Rules

Troubleshooting

AWS Tutorials

✉️ Contact support

Resurface captures API calls from network sniffers, API gateways, and API microservices, in any combination. This section covers all the options to capture API calls to your Resurface database.

Getting capture URL

Each Resurface cluster has a capture URL that is used to receive incoming API calls. This is different than the URL used to connect to the database. Run this script to get your capture URL:

$ echo http://$(kubectl get svc resurface-kubernetes-ingress --namespace resurface --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")/fluke/messageSubmitting JSON

Resurface accepts API calls in JSON format from practically any source, including curl. This makes for an easy "hello world" test to verify that your database is able to receive API calls over the network, before configuring any sniffers or other data sources.

Here's an example of using `curl` to capture a single API call:

$ curl -X POST -H "Content-type: application/json" --data '[["request_method","GET"],["request_url", "http://myurl"],["response_code","200"]]' Sniffer DaemonSet

Resurface can deploy a network sniffer to every node in your Kubernetes cluster using a DaemonSet. This allows API calls to be captured without having to modify each pod. Our sniffer discovery feature automatically captures all API traffic as services start and stop within the cluster.

The sniffer DaemonSet is disabled by default, but can be enabled with a simple helm command:

$ helm upgrade -i resurface resurfaceio/resurface --namespace resurface --set sniffer.enabled=true --set sniffer.discovery.enabled=true --reuse-valuesSniffer sidecar

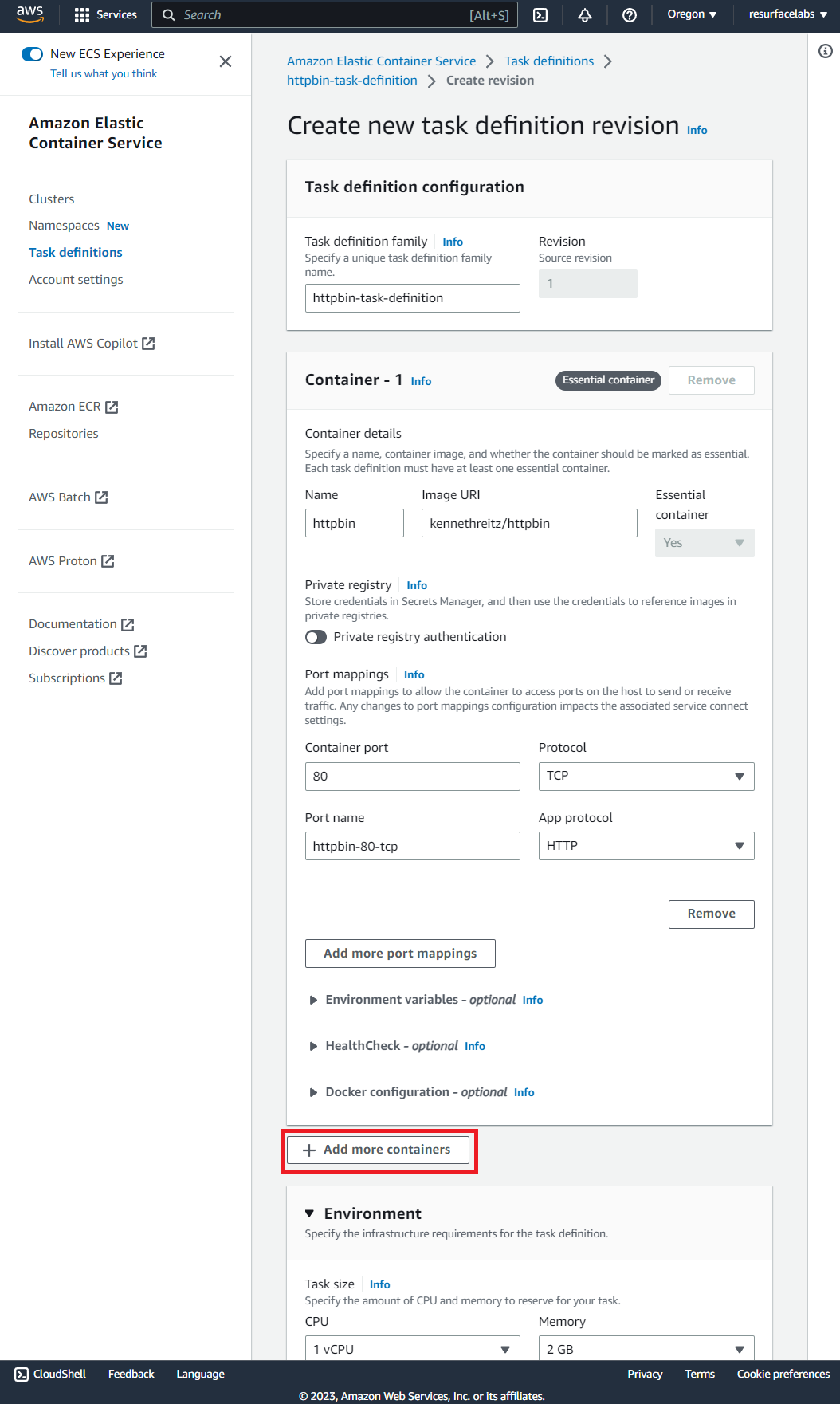

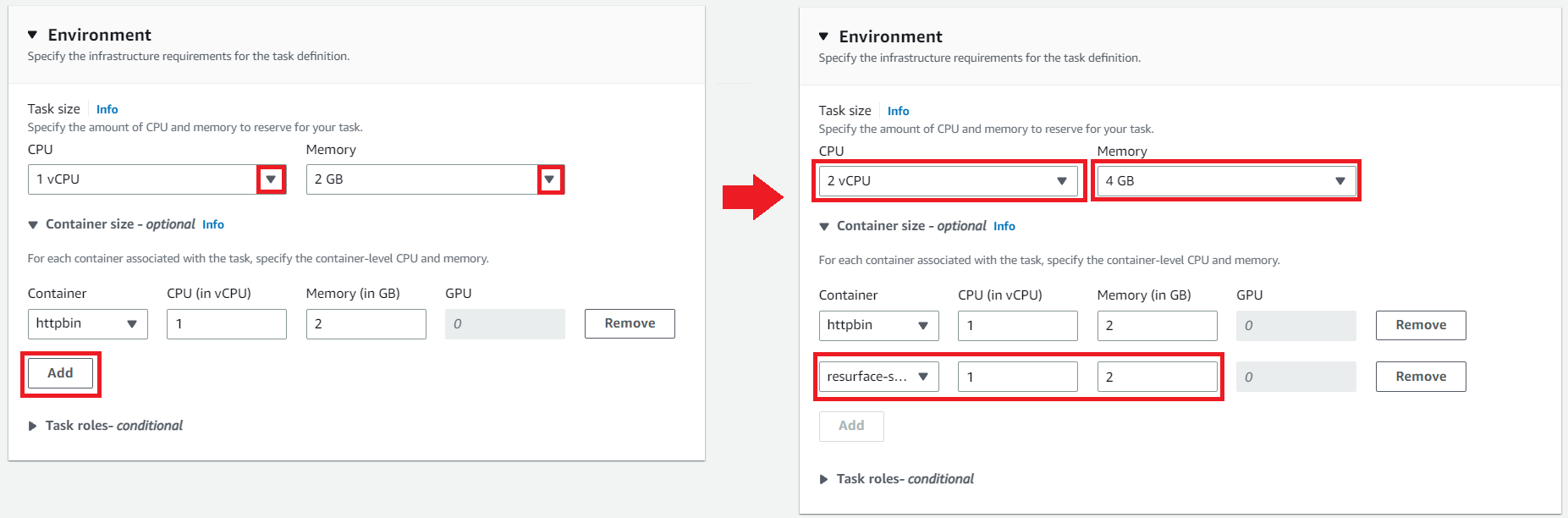

Resurface provides a containerized network-level packet-capture sniffer that can run alongside your own applications as a sidecar. This allows API calls to be captured directly from their shared network interface. Our sniffer sidecar works for AWS ECS, Azure ACI, Docker compose, stand-alone Kubernetes manifests and pretty much anywhere where you can run multi-container applications.

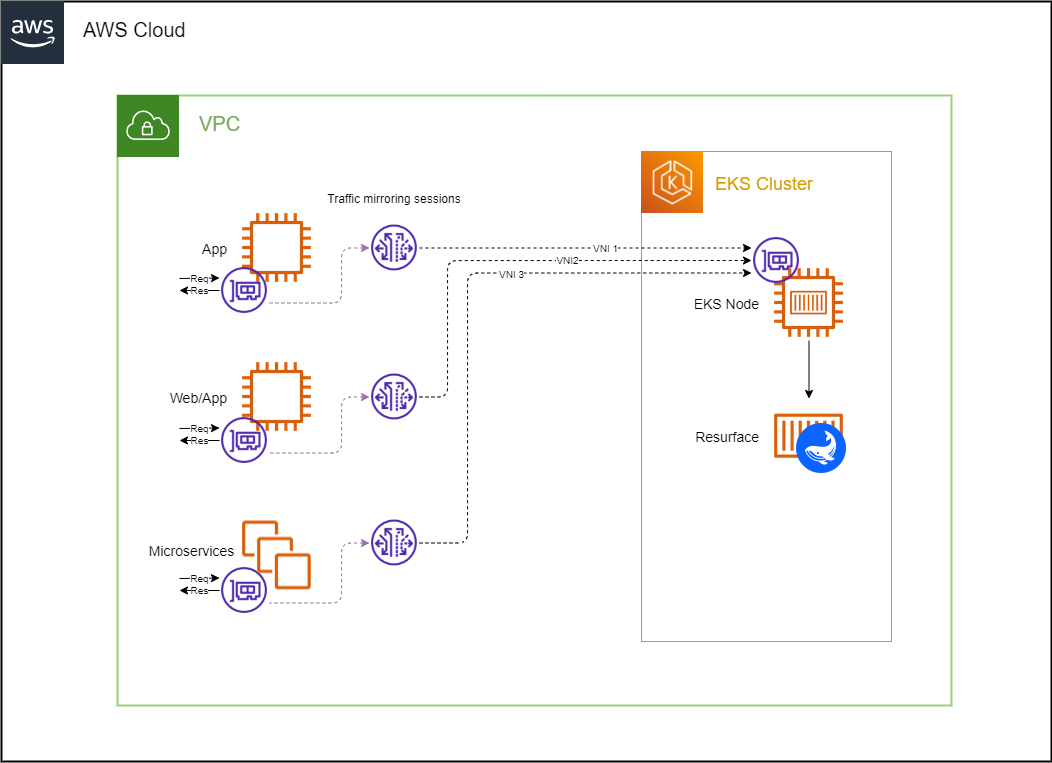

VPC traffic mirroring

Traffic mirroring (supported by Amazon VPC) copies network traffic from EC2 instances to monitoring platforms like Resurface. This allows a high volume of API traffic to be delivered to a Resurface network sniffer that captures the API calls. Traffic monitoring doesn't require changes to any existing APIs, and doesn't negatively impact API performance.

Tyk API Gateway

For APIs fronted by a Tyk gateway, API calls can be easily captured to Resurface through the Tyk pump. Using the Tyk pump does not slow down calls made through the Tyk gateway. All code related to Tyk pump integration is open-source (and packaged/distributed by Tyk), but is independently tested and supported by Resurface.

We're proud to be part of the Tyk community! Resurface Labs won a Tyk Community Award in 2021, and announced a formal partnership with Tyk in 2023.

AWS API Gateway

For APIs fronted by Amazon API Gateway, API calls can be captured to your Resurface database through Kinesis data streams. This doesn't require changes to any existing APIs, and doesn't negatively impact API performance. This open-source integration module is shared on GitHub under the Apache2 license, and is fully supported by Resurface.

Azure API Management

For APIs fronted by Azure API Management (APIM), API calls can be captured to your Resurface database through Event Hub integration. This doesn't require any changes to your existing APIs, just minor changes to your APIM configuration. This open-source integration module is shared on GitHub under the Apache2 license, and is fully supported by Resurface.

Kong API Gateway

For APIs fronted by a Kong gateway, API calls can be captured to Resurface just by adding a Kong plugin. Our open-source plugin is hosted on GitHub, shared under the Apache2 license, and is fully supported by Resurface.

Logger libraries

Our open-source logging libraries are easy to integrate, with friendly Apache2 licensing and minimal dependencies. Plus these include prebuilt middleware for many popular frameworks, so you can be logging API calls in just a few minutes.

Adding Capacity

Resurface scales from relatively small to really big, using the same helm commands. Start with a single node, or a small cluster, before turning on Iceberg integration and scaling to many terabytes of storage.

Capacity planning

Simply put, Resurface scales by adding nodes to a cluster. However Resurface is deployed or configured, you'll see an immediate linear improvement in capture and storage capacity with each node added.

Kinds of nodes: When first installed, a Resurface cluster contains a single node, called the coordinator node. The cluster can be expanded by adding one or more worker nodes. All nodes participate in capturing API calls and processing distributed queries. Only the coordinator node runs the Resurface UI and responds to SQL queries from external applications.

Sizes of nodes: Coordinator and worker nodes within a cluster are always the same size. CPU and memory quotas are enforced through Kubernetes. Larger quotas are typically enforced when Iceberg integration is enabled.

Capture capacity: Each node in a cluster can typically capture between 1k-10k calls/sec, depending on the size of your API calls and the speed of your virtual CPUs.

Storage capacity: Each node in a cluster has a local persistent volume that can typically store 1-10M calls, depending on the size and compressability of your API calls. When Iceberg integration is enabled, Resurface uses up to 100GB of S3/Minio storage per node deployed.

External services: Resurface does not depend on any Hadoop, Hive, RDS, Kafka, Spark, Zookeeper services or external databases. Resurface optionally requires S3 or Minio for storage when Iceberg integration is enabled. Resurface optionally integrates with existing authentication services as needed.

Supported chipsets: Resurface runs on 64-bit x86 and ARM CPUs, including Intel Xeon, AMD, Amazon Graviton and Apple Silicon. It is recommended (but not strictly required) that all nodes in a cluster use the same chipset.

Fault tolerance

Trino (and therefore Resurface) uses an eventual availability approach to fault tolerance. Resurface is designed to avoid permanently losing data when a single node fails, but some data or functionality may be temporarily unavailable when one or more nodes are down. This approach works well when the failure/partitioning of a node is relatively rare, when the recovery of a node is relatively fast, and when there is no appetite to deploy all the extra/duplicate infrastructure required to guarantee high availability. Trino does not currently support true HA deployments because the eventual availability model works so well, especially on Kubernetes where failed nodes will be automatically recovered.

Coordinator availability: If the coordinator node goes down, the Resurface UI will not be available, and the cluster will be unable to process any external queries until the coordinator node is recovered. Under normal circumstances, Kubernetes will restore a failed coordinator within a few minutes. Alerting and other integration features are only available while the coordinator node is running. Worker nodes will be able to capture API calls without interruption while the coordinator node is down or restarting.

Worker availability: If a worker node fails, all remaining nodes will continue to capture API calls without interruption. Data stored locally by the failed worker will be unavailable until the worker node is restarted, which is typically within a few minutes.

Iceberg availability: Any data stored on Iceberg is available as long as the coordinator node in a cluster is running, and S3/Minio are operating and reachable over the network. (Minio provides rich options for storage availability, which are managed separately from Resurface)

For individual queries: An individual query will fail if any node fails while the query is being executed. Trino has recently introduced new options for fault-tolerant execution of queries, but these aren't supported by Resurface yet.

Out-of-space handling: Resurface is designed to operate while retaining as much data as possible. The database will automatically drop the oldest API calls as new ones arrive. Resurface operates at >80% of its configured capacity without requiring any manual intervention. If an unexpected out-of-space condition is detected (typically due to a misconfiguration) then Resurface will reduce its storage settings accordingly and attempt to keep running.

Configuring workers

Within a few minutes of being created, new workers will automatically connect to the coordinator node and start capturing API calls.

Scale your Resurface database to a 3-node cluster:

$ helm upgrade -i resurface resurfaceio/resurface -n resurface --set multinode.enabled=true --set multinode.workers=2 --reuse-values⚠️ You can reduce the number of workers, but data stored by those workers will be lost when their persistent volumes are deleted.

Enabling Iceberg storage

Apache Iceberg is a popular open standard for storing huge tables, and is natively supported by Resurface and Trino.

Resurface is the only API security platform that offers seamless Iceberg integration. To the user or integrator, Resurface behaves exactly the same when Iceberg storage is enabled, but there will be a lot more data available for analysis.

Iceberg requires an external object store, which can be either Minio or Amazon S3. API calls are initially captured on Resurface nodes, and then this data is moved to Minio or S3 in the background automatically, without users being aware this is happening. Resurface manages this entire process by creating Iceberg tables and views, periodically migrating new data to Iceberg, deleting old data from Minio/S3 as new data arrives, and ensuring that queries never encounter duplicate or missing details as replication occurs. Any data that is already stored on the Resurface cluster will be preserved when Iceberg integration is enabled. All default and custom signatures (and all external SQL queries) will continue to work without any changes.

⚠️ Iceberg storage is enabled through a special license option. Please contact us if you'd like an evaluation license.

⚠️ Once enabled, Iceberg storage cannot be disabled without losing all data stored by the cluster.

Enable Iceberg integration for Minio:

$ helm upgrade resurface resurfaceio/resurface -n resurface --reuse-values --set iceberg.enabled=true --set minio.enabled=true --set minio.rootUser=YOUR_CUSTOM_MINIO_USERNAME --set minio.rootPassword=YOUR_CUSTOM_MINIO_PASSWORDOptionally enable Minio console:

$ helm upgrade resurface resurfaceio/resurface -n resurface --set ingress.minio.expose=true --reuse-valuesEnable Iceberg integration for S3, when running on AWS:

$ helm upgrade resurface resurfaceio/resurface -n resurface --reuse-values --set iceberg.enabled=true --set iceberg.s3.enabled=true --set iceberg.s3.bucketname=YOUR_AWS_S3_BUCKET_NAME --set iceberg.s3.aws.region=YOUR_AWS_S3_BUCKET_REGION --set iceberg.s3.aws.accesskey=YOUR_AWS_ACCESS_KEY_ID --set iceberg.s3.aws.secretkey=YOUR_AWS_SECRET_ACCESS_KEYSQL Reference

Getting Started

Installation

Administration

Capturing API Calls

Adding Capacity

SQL Reference

About Trino

Client connections

Generating SQL

Schemas and views

Column definitions

Bitmap columns

Bitmap collections

Custom views

Connector functions

JSON Format

Logging Rules

Troubleshooting

AWS Tutorials

✉️ Contact support

Resurface is powered by a distributed SQL database, with an opinionated schema that is purpose-built for API monitoring. This section will help you run any kind of SQL query, from simple counts to complex aggregations.

About Trino

Resurface is built on Trino, formerly known as PrestoSQL. This was originally developed at Facebook but is now an independent open-source project. Trino is a powerful distributed query engine that provides a common SQL interface to many popular databases, and has a small footprint compared with many big data platforms.

Resurface extends and optimizes Trino in a few key ways:

- The Resurface web interface runs alongside the Trino web UI.

- Resurface uses native Iceberg support provided by Trino.

- Resurface adds a hot-data storage engine for short-term capture and querying of API calls.

- Resurface seamlessly replicates data to Iceberg while providing uninterrupted query access to all API calls.

- Resurface adds custom scalar and aggregate functions to optimize queries on API calls.

- Resurface inherits all Trino security features for TLS, user authentication, and user permissions.

We're happy to support and contribute back to the Trino community! 🐰

Rob hangs with the Trino team to dish about custom storage engines and API use-cases.

Client connections

There are several ways to connect to Resurface and run SQL queries, depending on your requirements.

Each connection method supports the same SQL dialect, and the same TLS and user authentication options to protect your data.

Using JSON API

This is the easiest way to submit external queries but has some key limitations.

Benefits:

- REST interface requiring no client libraries

- Accepts a single SQL statement (from POST data)

- Supports complex statements including

WITHandUNION ALL - Supports multiple statements (encoded as array)

- Returns query results as JSON document

Limitations:

- Only string and number types supported

- Not suitable for huge result sets

- Simple error handling (empty document on failure)

Query when no authentication is configured:

curl -X POST --user 'rob:' --data 'select count(*) as total from resurface.data.messages' http://localhost/ui/api/resurface/runsqlQuery with basic authentication:

curl -X POST --user 'rob:blah1234' --data 'select count(*) as total from resurface.data.messages' https://localhost/ui/api/resurface/runsqlIn the previous two examples, curl converts the user parameter into a valid Authorization header. If you aren't using curl, you'll have to calculate

the Authorization header by appending username:password and applying base64 encoding.

Query with Authorization header:

curl -X POST -H "Authorization: cm9iOmJsYWgxMjM0" --data 'select count(*) as total from resurface.data.messages' https://localhost/ui/api/resurface/runsqlUsing Trino client libraries

This is the most flexible way to submit external queries but requires more work to integrate.

Benefits:

- All client libraries are free and open-source

- Exposes native types for numbers, dates, arrays, and maps

- Easier iterating through very large datasets

- Custom error handling supported

Limitations:

- Requires Trino JDBC, ODBC, Java, Python, Node, or R library

- No automatic conversion to JSON

- More difficult to integrate

Trino documentation provides a simple JDBC example to follow.

Start with a count query:

select count(*) as total from resurface.data.messagesUsing common database tools

Trino works with DBeaver, dbt, DataGrip, Metabase, Tableau, Looker, Superset, and many other database & ETL tools. Some of these tools are preconfigured with Trino client libraries, but it's recommended to use the library version that matches the bundled Trino version.

Start with a count query:

select count(*) as total from resurface.data.messagesGenerating SQL

Now that you can connect and execute a basic count query, the obvious question is how to build SQL statements for more interesting cases.

While there are lots of examples shown in this documentation, Resurface makes it easy to copy SQL statements for any data shown the web interface. This is

typically easier than writing SQL by hand, especially since any relevant WHERE and GROUP BY clauses will be generated for you.

You'll find this Copy SQL function in the Share menu, and in the Copy button displayed in most charts. You can then paste this SQL into your client

or editor of choice.

Schemas and views

All of the data managed by Resurface is available through SQL queries, including request/response data, signature definitions, summary views, and settings. The database is organized into different schemas, where each schema acts as a separate namespace.

Examples in this documentation use fully qualified names: resurface.<schema-name>.<view-name>

All fully qualified names start with resurface because this refers to the Resurface connector for Trino. This connector manages all Resurface

schemas and their views, including views that merge data across Resurface and Iceberg connectors.

System views

The resurface.system schema is used for summaries that are automatically updated as new data arrives. With its fast cache for per-day and

per-signature summaries, resurface.system is the best starting point for multi-day reporting.

The resurface.system.summary view provides a multi-dimensional summary for each calendar day.

The resurface.volatile.sparklines view provides a sparkline summary for each configured signature.

Settings views

Configuration settings are stored in the resurface.settings schema.

Settings should only be modified using the web interface, but can be read by SQL queries.

Get all signature definitions:

select * from resurface.settings.view_catalog order by table_nameGet definition for a single signature:

select * from resurface.settings.view_catalog where table_name = 'completed_attacks'Data views

The single most important view is resurface.data.messages, which returns all API calls and has predefined columns for all request and response details.

This view merges data across Resurface and Iceberg catalogs, so that clients have a single unified view across all available data, even while data

is being replicated. This is also the base view for all signature views.

Get count of all API calls captured so far:

select count(*) from resurface.data.messagesGet all request and response details with limit:

select * from resurface.data.messages limit 100Get all details with offset & limit:

select * from resurface.data.messages offset 50 limit 100Select statements can use WHERE, HAVING, ORDER BY and WITH clauses as supported by Trino.

Get count of all GET requests:

select count(*) from resurface.data.messages where request_method = 'GET'Group by request method:

select request_method, count(*) from resurface.data.messages group by request_methodGroup by request method, using histogram function:

select histogram(request_method) from resurface.data.messagesSignature views

Each signature is available as a SQL view in the resurface.runtime schema.

Get count of completed attacks:

select count(*) from resurface.runtime.completed_attacksSignature views have the same predefined columns as resurface.data.messages for all request and response details.

Get all details for first 50 completed attacks:

select * from resurface.runtime.completed_attacks limit 50Get details for first 50 completed attacks with 'GET' request method:

select * from resurface.runtime.completed_attacks where request_method = 'GET' limit 50Index views

The resurface.data.messages_index view is significantly faster than resurface.data.messages for most types of queries.

But resurface.data.messages_index does not include a few specific (and typically large) columns:

graphql_queryrequest_bodyrequest_headersresponse_bodyresponse_headers

If your query doesn't touch any of the columns above, it's usually much faster to use the indexed variation.

Get count of all rows, with index:

select count(*) from resurface.data.messages_indexGet count of all GET requests, with index:

select count(*) from resurface.data.messages_index where request_method = 'GET'Indexes are supported for signatures as well, with one important limitation. An index will be created in the resurface.runtime_indexes schema only if the

signature does not reference any very large columns.

Get count of attacks, with index:

select count(*) from resurface.runtime_indexes.completed_attacks⚠️ Resurface does not automatically rewrite queries from external clients to use indexes when available. The only way to use an index is to explicitly

reference the resurface.data.messages_index view or the resurface.runtime_indexes schema as shown in the examples above.

Column definitions

These columns for request and response details are common across resurface.data.messages, signature views, and their indexes.

request_address

request_body

request_content_type

request_headers

request_json_type

request_method

request_method_safe

request_params

request_path

request_path_safe

request_port

request_protocol

request_query

request_url

request_user_agent

size_host_bytes

size_request_bytes

size_request_body_bytes

size_request_headers_bytes

size_request_params_bytes

size_request_url_bytes

response_body

response_code

response_code_int

response_content_type

response_date

response_date_and_hour

response_date_and_min

response_day_of_month

response_day_of_week

response_headers

response_hour_of_day

response_json_type

response_status

response_time

response_time_millis

size_response_bytes

size_response_body_bytes

size_response_headers_bytes

size_total_bytes

agent_category

The general category of agent used to make the API request. Calculated from request_user_agent, which may be spoofed by attackers.

Type: varchar

Indexed: yes

Example values:

Browser, Robot, Mobile App, Hacker, Cloud, UnknownSearch by value:

select count(*)

from resurface.data.messages_index

where agent_category = 'Robot'Summarize by value:

select agent_category, count(*) as count

from resurface.data.messages_index

group by agent_category order by count descagent_device

The type of hardware used to make the API request. Calculated from request_user_agent, which may be spoofed by attackers.

Type: varchar

Indexed: yes

Example values:

Desktop, Robot, Unknown, Robot Mobile, Phone, Hacker, Table, Mobile, Watch, TVSearch by value:

select count(*)

from resurface.data.messages_index

where agent_device = 'Robot'Summarize by value:

select agent_device, count(*) as count

from resurface.data.messages_index

group by agent_device order by count descagent_name

The name of the software program used to make the API request. Calculated from request_user_agent, which may be spoofed by attackers.

Type: varchar

Indexed: yes

Example values:

Chrome, AWS Security Scanner, Firefox, Zgrab, Safari, Googlebot, Masscan, BingbotSearch by value:

select count(*)

from resurface.data.messages_index

where agent_name = 'Chrome'Summarize by top 100 values:

select agent_name, count(*) as count

from resurface.data.messages_index

group by agent_name order by count desc limit 100apikey

Type: varchar

Indexed: yes

cookies

Type: varchar

Indexed: yes

cookies_count

Type: varchar

Indexed: yes

custom_fields

Special fields provided by loggers to capture additional details about the API call, user, or environment. These fields are not part of the original request or response, and cannot be filtered with logging rules.

Type: varchar

Indexed: yes

domain

Summarizes host into supported and unsupported DNS names, to aid with API discovery and drift detection. Detection of monitored, deprecated, and

prohibited domains is controlled through user settings. DNS names are flattened to a maximum of three segments (a.b.c) for better grouping.

Type: varchar

Indexed: yes

Example values:

api.resurface.io monitored domain

(Rogue) uncategorized calls

(Prohibited) unsupported or out-of-policy calls

(Deprecated) calls to legacy APIs

(IP address) no DNS name present

(Missing) no host present

(Malformed) host is present but not parseableSearch by value:

select count(*)

from resurface.data.messages_index

where domain = '(Rogue)'Summarize by top 100 values:

select domain, count(*) as count

from resurface.data.messages_index

group by domain order by count desc limit 100graphql_operation_name

The name of the operation actually invoked for a single GraphQL operation. A GraphQL query can declare multiple operation names but only one of these

is invoked per call. Only present when graphql_operations_count is equal to one, and null in other cases.

Type: varchar

Indexed: yes

Example value:

HeroNameAndFriendsSearch by value:

select count(*)

from resurface.data.messages_index

where graphql_operation_name = 'IntrospectionQuery'Summarize by top 100 values:

select graphql_operation_name, count(*) as count

from resurface.data.messages_index

group by graphql_operation_name order by count desc limit 100graphql_operation_type

The type of the operation actually invoked for a single GraphQL operation. A GraphQL query can declare multiple operations but only one of these is

invoked per call. Only present when graphql_operations_count is equal to one, and null in other cases.

Type: varchar

Indexed: yes

Allowed values:

QUERY, MUTATION, SUBSCRIPTIONSearch by value:

select count(*)

from resurface.data.messages_index

where graphql_operation_type = 'MUTATION'Summarize by value:

select graphql_operation_type, count(*) as count

from resurface.data.messages_index

group by graphql_operation_type order by count descgraphql_operations

Parsed details about any GraphQL operations detected in this API call. Each operation is indexed as a JSON object for the name and type of the operation invoked. Null if the request can't be parsed as a valid GraphQL operation.

Type: varchar

Indexed: yes

Example value:

[{"index":1,"type":"QUERY","name":"AllTheNews"},{"index":2,"type":"MUTATION","name":null},{"index":3,"type":"QUERY","name":"LatestNews"}]Count all mutation operations, whether in batches or single operations:

select sum(regexp_count(graphql_operations, '"MUTATION"'))

from resurface.data.messages_indexCount API calls with at least one mutation:

select count(*)

from resurface.data.messages_index

where strpos(graphql_operations, '"MUTATION"') > 0graphql_operations_count

The size of the graphql_operations collection. Zero when no GraphQL operations are detected. More than one for batched GraphQL operations.

Type: integer

Indexed: yes

Count batches:

select count(*)

from resurface.data.messages_index

where graphql_operations_count > 1Count total number of operations:

select sum(graphql_operations_count)

from resurface.data.messages_indexgraphql_query

The GraphQL query string for a single GraphQL operation. Only present when graphql_operations_count is equal to one, and null in other cases.

Type: varchar

Indexed: no

Example value:

{ hero { name } }Search for exact string match: (fastest but case-sensitive)

select count(*)

from resurface.data.messages

where strpos(graphql_query, 'createUser') > 0Search with like match: (slower but more expressive)

select count(*)

from resurface.data.messages

where graphql_query like '%createUser%'Search with regular expression: (slowest but most powerful)

select count(*)

from resurface.data.messages

where regexp_like(graphql_query, '(?i)createUser')graphql_variables

Optional GraphQL variables passed for a single GraphQL operation. Only present when graphql_operations_count is equal to one, and null in other cases.

Type: varchar

Indexed: yes

host

The host portion of request_url as seen by the API. May be a DNS name or IP address.

Type: varchar

Indexed: yes

Example values:

api.resurface.io

192.168.168.24Search by value:

select count(*)

from resurface.data.messages_index

where host like '%resurface.io'Summarize by top 100 values:

select host, count(*) as count

from resurface.data.messages_index

group by host order by count desc limit 100id

UUID string generated for each message received. This UUID was not present in the original request or response, but can be used to uniquely identify a record in the database.

Type: varchar

Indexed: yes

Example value:

0b94e74c-ecb7-4fa6-98e6-11ab9955eac1Load payload columns for one message id:

select request_body, response_body

from resurface.data.messages

where id = '0b94e74c-ecb7-4fa6-98e6-11ab9955eac1'Load payload columns for multiple message ids:

select request_body, response_body

from resurface.data.messages

where id in ('0b94e74c-ecb7-4fa6-98e6-11ab9955eac1', ...)interval_category

Summarizes performance from the user perspective. Calculated from interval_millis.

Type: varchar

Indexed: yes

Allowed values:

Satisfied, Tolerating, Frustrated, UnknownSearch by value:

select count(*)

from resurface.data.messages_index

where interval_category = 'Satisfied'Summarize by value:

select interval_category, count(*) as count

from resurface.data.messages_index

group by interval_category order by count descinterval_clique

The grouping used to build response time distributions. Calculated from interval_millis.

Type: varchar

Indexed: yes

Allowed values:

1..250 ms

250..500 ms

500..750 ms

750..1000 ms

1..2 sec

2..3 sec

3..4 sec

4..5 sec

5..6 sec

6..7 sec

7..8 sec

8..9 sec

9..10 sec

10..15 sec

15..20 sec

20..30 sec

Timeout

UnknownSearch by value:

select count(*)

from resurface.data.messages_index

where interval_clique = '500..750 ms'Summarize by value:

select interval_clique, count(*) as count

from resurface.data.messages_index

group by interval_clique order by count descinterval_millis

Elapsed milliseconds between the arrival of the request at the API and the completion of the response.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where interval_millis > 5000request_address

IP address extracted from one of the request headers below, or null if none of these are present:

- cf-connecting-ip

- fastly-client-ip

- forwarded

- forwarded-for

- true-client-ip

- x-forwarded-for

Type: varchar

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where request_address = '86.190.33.236'Summarize by top 100 values:

select request_address, count(*) as total

from resurface.data.messages_index

group by request_address

order by total desc

limit 100request_body

Original request payload as seen by the API. Automatically decompressed and decoded to a UTF-8 string.

Type: varchar

Indexed: no

Example value:

{"query":"query{\n allNews{\n id\n title\n body\n }\n}"}Search for exact string match: (fastest but case-sensitive)

select count(*)

from resurface.data.messages

where strpos(request_body, 'allNews') > 0Search with like match: (slower but more expressive)

select count(*)

from resurface.data.messages

where request_body like '%allNews%'Search with regular expression: (slowest but most powerful)

select count(*)

from resurface.data.messages

where regexp_like(request_body, '(?i)allnews')request_content_type

Value of the “Content-Type” request header, which indicates the type of body content. https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Content-Type

Type: varchar

Indexed: yes

Example value:

application/json; charset=UTF-8Search by value:

select count(*)

from resurface.data.messages_index

where request_content_type like 'application/json%'Summarize by value:

select request_content_type, count(*) as count

from resurface.data.messages_index

group by request_content_type order by count descrequest_headers

Original request headers as seen by the API. This is a list because the same header name can appear more than once and be associated with more than one value. (like "Cookie" headers)

This list will not include these request headers that are mapped to specific columns:

- "User-Agent" header is mapped to

request_user_agent - "Content-Type" header is mapped to

request_content_type - "X-Forwarded-For" and equivalent headers are mapped to

request_address

Type: varchar

Indexed: no

Example value:

[["content-length","0"],["x-amzn-trace-id","Root=1-5d8195a66013d475f0b19d"],["x-forwarded-port","80"],["x-forwarded-proto","http"]]Search where header is present:

select count(*) as count

from resurface.data.messages

where request_headers like '%["x-forwarded-port"%'Search where header value is present:

select count(*) as count

from resurface.data.messages

where request_headers like '%["x-forwarded-port","80%'request_json_type

Calculated by attempting to parse JSON request payloads when request_content_type indicates JSON.

Type: varchar

Indexed: yes

Allowed values:

null request_content_type not like 'application/json%'

OBJECT successfully parsed as JSON object

ARRAY successfully parsed as JSON array

SCALAR successfully parsed as JSON scalar value

MALFORMED JSON parsing failed because of a syntax errorSearch by value:

select count(*)

from resurface.data.messages_index

where request_json_type = 'OBJECT'Summarize by value:

select request_json_type, count(*) as count

from resurface.data.messages_index

group by request_json_type order by count descrequest_method

The type of operation made by the original API request.

Type: varchar

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where request_method = 'GET'Summarize by value:

select request_method, count(*) as count

from resurface.data.messages_index

group by request_method order by count descrequest_method_safe

Similar to request_method, but with nonstandard values replaced with "(Invalid)". Attackers may use methods with unexpected values or illegal characters,

and request_method_safe filters these out so the resulting values are safe to display in summary charts.

Type: varchar

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where request_method_safe = '(Invalid)'Summarize by value:

select request_method_safe, count(*) as count

from resurface.data.messages_index

group by request_method_safe order by count descrequest_params

Original request parameters as seen by the API. This is a list because the same param name can be associated with more than one value.

Type: varchar

Indexed: yes

Example value:

[["a","fetch"],["content","die(@md5(HelloThinkCMF))"]]Search where param is present:

select count(*) as count

from resurface.data.messages_index

where request_params like '%["content"%'Search where header value is present:

select count(*) as count

from resurface.data.messages_index

where request_params like '%["content","%'request_path

Parsed from request_url, this is the path to the resource referenced by the original API request. Always begins with a forward slash but may

include multiple slashes.

Type: varchar

Indexed: yes

Example value:

/blog/latest/new.jsonSearch by value:

select count(*)

from resurface.data.messages_index

where request_path = '/graphql'Summarize by top 500 values:

select request_path, count(*) as count

from resurface.data.messages_index

group by request_path order by count desc

limit 500request_path_safe

Similar to request_path, but with nonstandard values replaced with "(Invalid)". Attackers may use paths with illegal characters, and request_path_safe

filters these out so the resulting values are safe to display in summary charts.

Type: varchar

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where request_path_safe = '/graphql'Summarize by top 500 values:

select request_path_safe, count(*) as count

from resurface.data.messages_index

group by request_path_safe order by count desc

limit 500request_port

The network port used to make the original API request. Parsed from request_url.

For http traffic, a null value should be interpreted as port 80.

For https traffic, a null value should be interpreted as port 443.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where request_port = 8080Summarize by value:

select request_port, count(*) as count

from resurface.data.messages_index

group by request_port order by count descrequest_protocol

The network protocol used to make the original API request. Parsed from request_url.

Type: varchar

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where request_protocol = 'http'Summarize by value:

select request_protocol, count(*) as count

from resurface.data.messages_index

group by request_protocol order by count descrequest_query

The portion of request_url after the first ? character. For many apps this is a highly variable portion of the URL, since this is where any encoded

parameters will appear.

Type: varchar

Indexed: yes

Example value:

token=123&gclid=4625253Search by value:

select count(*)

from resurface.data.messages_index

where request_query like '%token=123%'Summarize by top 500 values:

select request_query, count(*) as count

from resurface.data.messages_index

group by request_query order by count desc

limit 500request_url

The complete URL from the original API request — including protocol (usually http or https), port (optional), path, and query string (after the first ? character).

Type: varchar

Indexed: yes

Example value:

https://resurface.io/api/info?token=123Search by value:

select count(*)

from resurface.data.messages_index

where request_url like '%/api/%'Summarize by top 500 values:

select request_url, count(*) as count

from resurface.data.messages_index

group by request_url order by count desc

limit 500request_user_agent

Value of the “User-Agent” request header, which indicates the type of agent making the request. https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/User-Agent

Type: varchar

Indexed: yes

Example value:

curl/7.64.1Search by value:

select count(*)

from resurface.data.messages_index

where request_user_agent like 'curl/%'Summarize by top 500 values:

select request_user_agent, count(*) as count

from resurface.data.messages_index

group by request_user_agent order by count desc

limit 500response_body

Original response payload as seen by the API. Automatically decompressed and decoded to a UTF-8 string.

Type: varchar

Indexed: no

Example value:

{ "version": "5.2.2" }Search for exact string match: (fastest but case-sensitive)

select count(*)

from resurface.data.messages

where strpos(response_body, url_encode('certs')) > 0Search with like match: (slower but more expressive)

select count(*)

from resurface.data.messages

where response_body like '%certs%'Search by regular expression: (slowest but most powerful)

select count(*)

from resurface.data.messages

where regexp_like(response_body, '(?i)certs')response_code

Status code returned as part of the response: https://developer.mozilla.org/en-US/docs/Web/HTTP/Status

Type: varchar

Indexed: yes

Example value:

200Search by value:

select count(*)

from resurface.data.messages_index

where response_code = '404'Summarize by value:

select response_code, count(*) as count

from resurface.data.messages_index

group by response_code order by count descresponse_code_int

Same as response_code but cast to an integer, or null if not a valid integer.

Type: integer

Indexed: yes

Example value:

200Search by value:

select count(*)

from resurface.data.messages_index

where response_code_int = 404Summarize by value:

select response_code_int, count(*) as count

from resurface.data.messages_index

group by response_code_int order by count descresponse_content_type

Value of the “Content-Type” response header, which indicates the type of body content. https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Content-Type

Type: varchar

Indexed: yes

Example value:

application/json; charset=UTF-8Search by value:

select count(*)

from resurface.data.messages_index

where response_content_type like 'application/json%'Summarize by value:

select response_content_type, count(*) as count

from resurface.data.messages_index

group by response_content_type order by count descresponse_date

The date when the response was generated. Calculated from response_time_mills.

Type: date

Indexed: yes

Example value:

2023-12-01Search using interval function:

select count(*)

from resurface.data.messages_index

where response_date > (current_date - interval '90' day)Search using value comparison:

select count(*)

from resurface.data.messages_index

where response_date > date('2023-12-01')response_date_and_hour

The date and hour of day (between 0 and 23) when the API response was generated. Calculated from response_time_millis.

Used to calculate per-hour summaries across multiple days.

Type: varchar

Indexed: yes

Example value:

2023-12-01:22Search by value:

select count(*)

from resurface.data.messages_index

where response_date_and_hour = '2023-12-01:22'Summarize by value over the last 5 days:

select response_date_and_hour, count(*) as count

from resurface.data.messages_index

where response_date > (current_date - interval '5' day)

group by response_date_and_hour

order by response_date_and_hourresponse_date_and_min

The date, hour of day (between 0 and 23), and minute of hour (between 0 and 59) when the API response was generated. Calculated from response_time_millis.

Used to calculate per-minute summaries across multiple hours.

Type: varchar

Indexed: yes

Example value:

2023-12-01:22:53Search by value:

select count(*)

from resurface.data.messages_index

where response_date_and_min = '2023-12-01:22:53'Summarize by value over the last 6 hours:

select response_date_and_min, count(*) as count

from resurface.data.messages_index

where response_time > (current_timestamp - interval '6' hour)

group by response_date_and_min

order by response_date_and_minresponse_day_of_month

The day of the month (between 1 and 31) when the API response was generated. Calculated from response_time_millis.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where response_day_of_month = 24Summarize by value:

select response_day_of_month, count(*) as count

from resurface.data.messages_index

group by response_day_of_month order by response_day_of_monthresponse_day_of_week

The day of the week (between 1 and 7) when the API response was generated. Calculated from response_time_millis.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where response_day_of_week = 4Summarize by value:

select response_day_of_week, count(*) as count

from resurface.data.messages_index

group by response_day_of_week order by response_day_of_weekresponse_headers

Original response headers as seen by the API. This is a list because the same header name can be associated with more than one value. (like "Set-Cookie" headers)

This list will not include request headers like "Content-Type" (which is mapped to response_content_type).

Type: varchar

Indexed: no

Example value:

[["connection","Keep-Alive"],["etag","c561c68d0ba92bbe"],["server","Apache"]]Search where header is present:

select count(*) as count

from resurface.data.messages

where response_headers like '%["etag"%'Search where header value is present:

select count(*) as count

from resurface.data.messages

where response_headers like '%["etag","c561c68d0ba92bbe"%'response_hour_of_day

The hour of day (between 0 and 23) when the API response was generated. Calculated from response_time_millis.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where response_hour_of_day = 8Summarize by value:

select response_hour_of_day, count(*) as count

from resurface.data.messages_index

group by response_hour_of_day order by response_hour_of_dayresponse_json_type

Calculated by attempting to parse JSON response payloads when present.

Type: varchar

Indexed: yes

Allowed values:

null response_content_type not like 'application/json%'

OBJECT successfully parsed as JSON object

ARRAY successfully parsed as JSON array

SCALAR successfully parsed as JSON scalar value

MALFORMED JSON parsing failed because of a syntax errorSearch by value:

select count(*)

from resurface.data.messages_index

where response_json_type = 'OBJECT'Summarize by value:

select response_json_type, count(*) as count

from resurface.data.messages_index

group by response_json_type order by count descresponse_status

Summarizes the success or failure of the API response. Calculated based on analysis of the entire response.

Categorizes cases where the response_code is 200 (meaning 'OK') but the response is really not OK.

Type: varchar

Indexed: yes

Allowed values:

Leaking data leak detected in response headers or body

Malformed JSON response is not parseable

Redirected response code is 3XX range

Unauthorized response code is 401

Forbidden response code is 403

Throttled response code is 429

Client Error response code in 4XX range, except for conditions above

Server Error response code in 5XX range, except for conditions above

JSON Error error object detected in JSON response

Completed response code is 200 and no other problems notedSearch by value:

select count(*)

from resurface.data.messages_index

where response_status = 'Leaking'Summarize by value:

select response_status, count(*) as count

from resurface.data.messages_index

group by response_status order by count descresponse_time

A native timestamp for when the API response was generated. Calculated from response_time_millis.

Type: timestamp(3) with time zone

Indexed: yes

Example value:

2023-12-01 00:02:44.0Search using interval function:

select count(*)

from resurface.data.messages_index

where response_time > (current_timestamp - interval '90' minute)Search using value comparator:

select count(*)

from resurface.data.messages_index

where response_time > (timestamp '2023-12-01 00:02:44.0')response_time_millis

Milliseconds since UNIX epoch (Jan 1 1970).

Type: bigint

Indexed: yes

Example value:

1604475303099Search using value comparator:

select count(*)

from resurface.data.messages_index

where response_time_millis > 1604475303099risk_category

Summarizes risk associated with the API request and response. Calculated from risk_score.

Type: varchar

Indexed: yes

Allowed values:

High, Medium, LowSearch by value:

select count(*)

from resurface.data.messages_index

where risk_category = 'High'Summarize by value:

select risk_category, count(*) as count

from resurface.data.messages_index

group by risk_category order by count descrisk_score

Numeric score for the risk associated with the API request and response. Calculated from all available request and response details.

Type: double

Indexed: yes

Example value:

1.25Search by value:

select count(*)

from resurface.data.messages_index

where risk_score > 1Get average value:

select avg(risk_score)

from resurface.data.messages_indexsession_fields

Fields copied by loggers from the user session active when the response is generated. These fields are not captured by default but can be enabled/filtered using logging rules.

Type: varchar

Indexed: yes

shard_file

Internal partition ID used for replication to Iceberg. Not intended for use by client applications.

Type: varchar

Indexed: yes

size_category

Summarizes approximate size of the request and response as seen by the API. Calculated from size_total_bytes.

Type: varchar

Indexed: yes

Allowed values:

Empty, Tiny, Small, Typical, Large, ExcessiveSearch by value:

select count(*)

from resurface.data.messages_index

where size_category = 'Tiny'Summarize by value:

select size_category, count(*) as count

from resurface.data.messages_index

group by size_category order by count descsize_host_bytes

Size in bytes of the host portion of request_url as seen by the API.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_host_bytes > 64size_request_bytes

Approximate size in bytes of the entire request — including headers, params and body, as seen by the API. All string content will be decoded & decompressed, and so this size may be significantly larger than the amount of data actually received over the network.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_request_bytes > 4096size_request_body_bytes

Approximate size in bytes of request_body, after decoding/decompressing strings.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_request_body_bytes > 4096size_request_headers_bytes

Approximate size in bytes of request_headers, after decoding/decompressing strings. Includes JSON padding not present in original request.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_request_headers_bytes > 4096size_request_params_bytes

Approximate size in bytes of request_params, after decoding/decompressing strings. Includes JSON padding not present in original request.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_request_params_bytes > 4096size_request_url_bytes

Size in bytes of request_url as seen by the API.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_request_url_bytes > 4096size_response_bytes

Approximate size in bytes of the entire response — including headers, params and body, as seen by the API. All string content will be decoded & decompressed, and so this size may be significantly larger than the amount of data actually returned over the network.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_response_bytes > 4096size_response_body_bytes

Approximate size in bytes of response_body, after decoding/decompressing strings.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_response_body_bytes > 4096size_response_headers_bytes

Approximate size in bytes of response_headers, after decoding/decompressing strings. Includes JSON padding not present in original response.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_response_headers_bytes > 4096size_total_bytes

Approximate size in bytes of the request and response combined, as seen by the API. All string content will be decoded & decompressed, and so this size may be significantly larger than the amount of data actually received and returned over the network.

Type: int

Indexed: yes

Search by value:

select count(*)

from resurface.data.messages_index

where size_total_bytes > 4096version

The API version detected at the start of the request_path, or "(none)" if no version was specified. Helpful for tracking deprecated APIs.

Type: varchar

Indexed: yes

Example values:

1

2b

3.0.3

(none)Search by value:

select count(*)

from resurface.data.messages_index

where version = '3.1c'Summarize by top 100 values:

select host, version, count(*) as count

from resurface.data.messages_index

group by host, version order by count desc limit 100Bitmap columns

More than 75 bitmap columns are automatically calculated for every API call. These are included in all indexes to speed query performance when referencing multiple attributes of request and response in a single query. These also help make complex queries more readable.

Type: boolean (no NULLs)

Indexed: yes

Search using single bitmap:

select count(*)

from resurface.data.messages_index

where request_json_presentSearch using multiple bitmaps:

select count(*)

from resurface.data.messages_index

where request_json_present and response_json_presentSearch using multiple bitmaps and other columns:

select count(*)

from resurface.data.messages_index

where request_json_present and response_json_present and risk_score = 'High'Available bitmap columns:

request_info_body_present

request_info_content_type_present

request_info_user_agent_present

request_info_hacking_tool

request_info_content_encoding_present

request_info_content_encoding_compressed

request_info_content_length_present

request_info_transfer_encoding_present

request_info_address_present

request_info_address_private

request_info_web_file

request_info_php_file

request_info_host_present

request_info_host_ip_address

request_info_host_ip_private

request_info_host_domain_name

request_info_host_absolute_name

request_info_host_monitored

request_json_present

request_json_is_array

request_json_is_object

request_json_is_scalar

request_graphql_present

request_graphql_batch

request_graphql_call

request_graphql_has_introspection

request_graphql_has_mutation

request_graphql_has_subscription

request_threat_url_insecure

request_threat_content_type_missing

request_threat_body_malformed

request_threat_url_malformed

request_threat_host_malformed

response_info_body_present

response_info_content_type_present

response_info_content_encoding_present

response_info_content_encoding_compressed

response_info_content_length_present

response_info_transfer_encoding_present

response_info_redirected

response_info_unauthorized

response_info_forbidden

response_info_throttled

response_info_error_client

response_info_error_server

response_json_present

response_json_is_array

response_json_is_object

response_json_is_scalar

response_json_error_present

response_leak_system_header

response_leak_directory_listing

response_leak_source_cgi

response_leak_error_java

response_threat_code_malformed

response_threat_body_malformed

response_threat_body_unexpected

response_threat_content_type_missing

response_threat_header_linebreak

attack_request_method_malformed

attack_request_body_unexpected

attack_request_url_linebreak

attack_request_url_unsafe_chars

attack_request_url_unicode

attack_request_url_working_file

attack_request_url_encoding_abuse

attack_request_header_linebreak

attack_request_multiple_connections

attack_request_param_linebreak

attack_request_content_length_malformed

attack_request_content_type_malformed

attack_request_transfer_encoding_unexpected

attack_application_path_traversal

attack_application_remote_file_inclusion

attack_application_restricted_file

attack_application_param_pollution

attack_application_request_smuggling

attack_application_response_splitting

attack_injection_jndi

attack_injection_ldapBitmap collections

These columns group related bitmaps together, making these easier to query at once. These are hexadecimal values where bitmaps are encoded in powers of two. So these columns are either compared with zero, or by counting the number of non-zero bits set.

Type: integer (32-bit)

Indexed: yes

Search for all attacks:

select count(*)

from resurface.data.messages_index

where bitmap_attack_bits != 0Search for completed attacks:

select count(*)

from resurface.data.messages_index

where bitmap_attack_bits != 0 and response_status = 'Completed'Search for multiple attacks per request:

select count(*)

from resurface.data.messages_index

where bit_count(bitmap_attack_bits, 32) >= 2Available bitmap collections:

bitmap_request_info

bitmap_request_json

bitmap_request_graphql

bitmap_request_pii

bitmap_request_threat

bitmap_response_info

bitmap_response_json

bitmap_response_leak

bitmap_response_pii